Introduction To NPUs

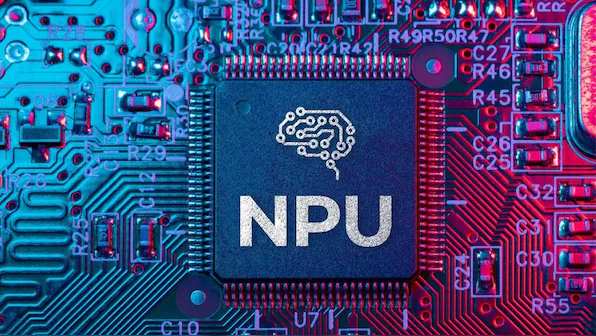

As artificial intelligence (AI) and machine learning (ML) technologies continue to evolve, the demand for more efficient and powerful hardware accelerators has grown rapidly. One of the most significant developments in this area is the Neural Processing Unit (NPU), a specialized processor designed to accelerate neural network computations so that AI and ML tasks run faster and use less energy. This article explores NPUs in detail: their architecture, advantages, applications, and future prospects.

Understanding NPUs: The Basics

Evolution from CPUs and GPUs to NPUs

- CPUs: Central Processing Units (CPUs) have been the backbone of general computing for decades. They are versatile and can handle a wide range of tasks, but their architecture is not optimized for the massively parallel arithmetic that neural networks require.

- GPUs: Graphics Processing Units (GPUs) brought significant improvements to AI and ML processing. Originally designed for rendering graphics, GPUs are adept at parallel tasks, which makes them well suited to neural network computations. However, they still have limitations in power efficiency and scalability for specific AI workloads.

- NPUs: Neural Processing Units are the next step in this evolution. They are designed from the ground up for neural network computations and, for the workloads they target, typically deliver better performance per watt than CPUs and GPUs.

NPU vs GPU: Differences

| Feature | NPU (Neural Processing Unit) | GPU (Graphics Processing Unit) |
|---|---|---|
| Focus | AI and machine learning tasks | Graphics processing and general-purpose parallel computing |
| Architecture | Arrays of multiply-accumulate (MAC) units with on-chip memory, specialized for neural network operations | Thousands of programmable cores, versatile across many parallel workloads |
| Performance | High efficiency for AI workloads at low power consumption | High throughput for parallel processing; widely used for AI training |
| Use cases | AI-powered devices (smartphones, IoT), accelerating AI inference | Data centers, workstations for AI research, training complex neural networks |
| Availability | Mostly integrated into specific devices (e.g., smartphone and laptop chips); some standalone commercial options | Widely available from multiple manufacturers |

Architectural Innovations of NPUs

NPUs incorporate several architectural innovations to maximize their efficiency and performance for neural network tasks. Key features include:

Specialized Processing Elements

NPUs consist of numerous specialized processing elements designed to execute the mathematical operations that underpin neural networks, such as matrix multiplications and convolutions. These processing elements are highly optimized for throughput and parallelism, allowing NPUs to process large volumes of data simultaneously.
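To make the idea of parallel processing elements concrete, the sketch below simulates a small grid of them computing a matrix product. It assumes an output-stationary layout, in which each processing element (PE) owns one output value and performs one multiply-accumulate (MAC) per step; real systolic arrays also pass operands between neighboring PEs, which this simplification leaves out. The function name `pe_grid_matmul` is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def pe_grid_matmul(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Multiply a (m x k) by b (k x n) on a simulated m x n grid of PEs.

    PE (i, j) holds the accumulator for output element C[i][j]. At each step t,
    every PE performs one multiply-accumulate: acc += a[i][t] * b[t][j].
    Returns the product and the number of parallel steps (here, k).
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    acc = [[0.0] * n for _ in range(m)]
    steps = 0
    for t in range(k):
        # In hardware, all m * n MACs in this step happen at the same time;
        # Python simply runs them one after another.
        for i in range(m):
            for j in range(n):
                acc[i][j] += a[i][t] * b[t][j]
        steps += 1
    return acc, steps


product, steps = pe_grid_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, steps)  # [[19.0, 22.0], [43.0, 50.0]] 2
```

The 2 × 2 grid finishes in two steps, whereas a single scalar unit would need eight sequential MACs for the same product.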

Memory Hierarchy and Bandwidth

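Effective memory management is crucial for NPU performance, because moving data often costs more time and energy than computing on it. NPUs therefore keep frequently used data close to the processing elements: designs typically combine on-chip SRAM buffers (often software-managed rather than hardware caches) with off-chip memory, which in data-center accelerators is often high-bandwidth memory (HBM). Keeping data on chip minimizes latency and maximizes throughput, which is crucial for real-time AI applications.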
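One common way NPUs and their compilers exploit on-chip memory is tiling: a large matrix multiplication is split into blocks small enough to fit in the on-chip buffer, so each value fetched from off-chip memory is reused many times. The sketch below counts element loads under a simplified model that is an assumption rather than a description of any particular chip: one tile of each input is fetched per block step, and output accumulators stay on chip. The function name `tiled_matmul` is illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def tiled_matmul(a: Matrix, b: Matrix, tile: int) -> Tuple[Matrix, int]:
    """Multiply two n x n matrices block by block and count off-chip element loads."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    offchip_loads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # "Fetch" one tile of A and one tile of B into the on-chip buffer.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                offchip_loads += sum(map(len, a_tile)) + sum(map(len, b_tile))
                # All arithmetic below reads only the buffered tiles.
                for ii, a_row in enumerate(a_tile):
                    for jj in range(len(b_tile[0])):
                        c[i0 + ii][j0 + jj] += sum(
                            a_val * b_tile[kk][jj] for kk, a_val in enumerate(a_row)
                        )
    return c, offchip_loads


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i - j) for j in range(n)] for i in range(n)]
_, loads_no_reuse = tiled_matmul(a, b, tile=1)
_, loads_tiled = tiled_matmul(a, b, tile=4)
print(loads_no_reuse, loads_tiled)  # 1024 256
```

Under this model, off-chip traffic falls from 2n³ to 2n³/T element loads for a tile size T that divides n (a factor of 4 here), which is why a larger on-chip buffer translates directly into less off-chip traffic.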

Power Efficiency

Power efficiency is a significant consideration in NPU design. NPUs are engineered to perform neural network computations with minimal energy consumption, which is particularly important for edge devices and mobile applications where power resources are limited.

Flexibility and Programmability

Despite their specialization, NPUs are designed to be flexible and programmable to support a wide range of neural network architectures and algorithms. This flexibility ensures that NPUs can keep pace with the rapidly evolving field of AI and ML.

Advantages of NPUs (Neural Processing Units)

NPUs offer several advantages over traditional processing units when it comes to AI and ML tasks:

Increased Performance

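For the workloads they are designed for, NPUs can perform neural network computations significantly faster than CPUs and more efficiently than GPUs. This translates into faster inference, and in data-center accelerators faster training, enabling larger and more accurate models to be developed and deployed. Most NPUs in consumer devices, however, target inference; training large models is still done mainly on GPUs and other data-center accelerators.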
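Performance figures for NPUs are usually quoted as peak operations per second, derived from the number of MAC units and the clock rate, with each MAC counted as two operations (a multiply and an add). The sketch below turns that into a rough latency estimate for one convolution layer. The MAC-unit count, clock rate, utilization, and layer shape are hypothetical values chosen for illustration, not specifications of any real NPU.

```python
def peak_tops(mac_units: int, clock_hz: float) -> float:
    """Peak throughput in tera-operations per second (1 MAC = 2 operations)."""
    return 2 * mac_units * clock_hz / 1e12


def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k_h: int, k_w: int) -> int:
    """MACs for a standard 2D convolution: one k_h x k_w x c_in dot product per output."""
    return h_out * w_out * c_out * k_h * k_w * c_in


def layer_latency_s(macs: int, mac_units: int, clock_hz: float, utilization: float) -> float:
    """Estimated layer time if only `utilization` of the MAC units do useful work."""
    return macs / (mac_units * clock_hz * utilization)


# Hypothetical NPU: 4096 MAC units at 1 GHz, 50% average utilization.
MAC_UNITS, CLOCK_HZ, UTILIZATION = 4096, 1e9, 0.5

macs = conv2d_macs(h_out=56, w_out=56, c_in=64, c_out=64, k_h=3, k_w=3)
latency_us = layer_latency_s(macs, MAC_UNITS, CLOCK_HZ, UTILIZATION) * 1e6
print(f"peak: {peak_tops(MAC_UNITS, CLOCK_HZ):.3f} TOPS")  # peak: 8.192 TOPS
print(f"layer: {macs:,} MACs, ~{latency_us:.1f} us")  # layer: 115,605,504 MACs, ~56.4 us
```

Real utilization varies widely with layer shape and memory bandwidth, so this is a first-order estimate rather than a prediction.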

Improved Efficiency

By optimizing for the specific needs of neural networks, NPUs achieve greater computational efficiency. This means they can perform more operations per watt of power consumed, making them ideal for energy-constrained environments such as mobile devices and embedded systems.
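Because a watt is one joule per second, operations per watt is the same as operations per joule, so an efficiency rating converts directly into energy per inference. The sketch below does that arithmetic. The 4 TOPS/W rating, the 1-billion-operation model, and the 15 Wh battery are hypothetical placeholders, and the calculation ignores the energy spent moving data, which in practice can be substantial.

```python
def energy_per_inference_j(ops_per_inference: float, tops_per_watt: float) -> float:
    """Energy in joules: 1 TOPS/W equals 1e12 operations per joule."""
    ops_per_joule = tops_per_watt * 1e12
    return ops_per_inference / ops_per_joule


def inferences_per_battery(battery_wh: float, joules_per_inference: float) -> float:
    """How many inferences a battery could power if nothing else drew energy."""
    battery_j = battery_wh * 3600  # 1 Wh = 3600 J
    return battery_j / joules_per_inference


# Hypothetical values for illustration only.
e = energy_per_inference_j(ops_per_inference=1e9, tops_per_watt=4.0)
print(f"{e * 1e3:.2f} mJ per inference")  # 0.25 mJ per inference
print(f"{inferences_per_battery(15.0, e):,.0f} inferences per charge")  # 216,000,000 inferences per charge
```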

Scalability

NPUs are designed to scale with the size of neural networks, for example by adding processing elements or cores and splitting work among them. As AI models grow in complexity and size, this lets NPUs absorb much of the increased computational demand, although larger models still require more compute, memory, and energy overall.
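One way such scaling is achieved is data parallelism across several NPU cores: the rows of the output are divided among cores, and each core computes its slice independently. The sketch below is a sequential simulation of that split; the core count and the helper names `split_rows` and `multicore_matmul` are hypothetical. Every core needs all of `b`, which is one reason memory bandwidth limits how far this approach scales.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def split_rows(m: int, cores: int) -> List[Tuple[int, int]]:
    """Divide m rows into `cores` contiguous [start, end) ranges of near-equal size."""
    base, extra = divmod(m, cores)
    ranges, start = [], 0
    for core in range(cores):
        size = base + (1 if core < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def multicore_matmul(a: Matrix, b: Matrix, cores: int) -> Matrix:
    """Compute a @ b by giving each (simulated) core a block of output rows."""
    n = len(b[0])
    result: Matrix = []
    for start, end in split_rows(len(a), cores):
        # In hardware these blocks would run concurrently on separate cores.
        block = [
            [sum(x * b[t][j] for t, x in enumerate(row)) for j in range(n)]
            for row in a[start:end]
        ]
        result.extend(block)
    return result


print(split_rows(10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
```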

Real-time Processing

Many AI applications, such as autonomous vehicles, robotics, and augmented reality, require real-time processing capabilities. NPUs, with their high throughput and low latency, are well-suited for these time-sensitive applications, ensuring that AI systems can respond swiftly to dynamic environments.
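A real-time system is usually judged against a per-frame deadline: at a given frame rate, all processing for one frame must finish within 1/fps seconds. The sketch below checks a hypothetical camera pipeline against that budget, assuming the stages run one after another with no overlap; the stage names and timings are invented for illustration.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def meets_deadline(stage_ms: Dict[str, float], fps: float) -> bool:
    """True if the stages, run back to back, fit inside one frame period."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame timings for a camera pipeline, in milliseconds.
pipeline = {"preprocess": 4.0, "npu_inference": 12.0, "postprocess": 3.0}

for fps in (30, 60):
    print(fps, round(frame_budget_ms(fps), 2), meets_deadline(pipeline, fps))
# 30 33.33 True
# 60 16.67 False
```

At 60 fps the same 19 ms pipeline misses its 16.67 ms budget, so either inference must get faster or work must be overlapped across frames.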

Applications of NPUs

The deployment of NPUs spans a wide array of industries and applications, each leveraging the unique strengths of NPUs to enhance performance and capabilities.

Mobile Devices

In smartphones and tablets, NPUs enable advanced features such as real-time language translation, enhanced camera functionalities, and improved voice recognition. By offloading AI tasks from the CPU and GPU, NPUs enhance the overall user experience while conserving battery life.

Autonomous Vehicles

Self-driving cars rely heavily on AI to process vast amounts of sensor data in real time. NPUs provide the computational power needed for tasks like object detection, path planning, and decision-making, helping to enable safe and efficient autonomous navigation.

Healthcare

In the medical field, NPUs accelerate tasks such as medical image analysis, disease diagnosis, and drug discovery. By processing large datasets quickly and accurately, NPUs help medical professionals make informed decisions faster, potentially saving lives.

Robotics

Robots in industrial automation, agriculture, and consumer electronics benefit from the real-time processing capabilities of NPUs. Tasks such as visual inspection, object manipulation, and autonomous navigation are significantly enhanced by the speed and efficiency of NPUs.

Edge Computing

NPUs are crucial for edge computing applications, where data processing needs to occur close to the source of data generation. This reduces latency and bandwidth usage, which is essential for applications like smart surveillance, IoT devices, and augmented reality.
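The bandwidth saving from processing at the edge is easy to estimate: compare streaming raw sensor data with sending only the results an on-device NPU extracts from it. The sketch below assumes uncompressed 8-bit RGB video and a hypothetical 24-byte record per detected object. Real cameras usually compress video, so the true saving is smaller than this raw comparison suggests, though typically still substantial.

```python
def raw_video_bytes_per_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Bandwidth needed to stream uncompressed frames."""
    return width * height * bytes_per_pixel * fps


def detections_bytes_per_s(objects_per_frame: int, bytes_per_object: int, fps: int) -> int:
    """Bandwidth needed to send only per-object results (e.g. class and bounding box)."""
    return objects_per_frame * bytes_per_object * fps


raw = raw_video_bytes_per_s(1920, 1080, 3, 30)
meta = detections_bytes_per_s(objects_per_frame=10, bytes_per_object=24, fps=30)
print(raw, meta, raw // meta)  # 186624000 7200 25920
```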

Future Prospects and Challenges

As the field of AI continues to advance, NPUs are poised to play an increasingly central role in driving innovation. However, several challenges and opportunities lie ahead.

Continued Innovation in Architecture

The architecture of NPUs will continue to evolve to keep pace with advancements in AI and ML. Future NPUs are likely to incorporate even more specialized processing units, advanced memory hierarchies, and improved power efficiency to handle increasingly complex models.

Integration with Other AI Hardware

The ecosystem of AI hardware includes not only NPUs but also CPUs, GPUs, and other specialized accelerators like TPUs (Tensor Processing Units) and FPGAs (Field Programmable Gate Arrays). Seamless integration and interoperability among these components will be crucial for building robust and versatile AI systems. Hybrid solutions that leverage the strengths of multiple types of processors may become more prevalent, offering enhanced performance and flexibility.

Standardization and Software Support

For NPUs to reach their full potential, robust software support and standardization are essential. This includes the development of optimized libraries, frameworks, and compilers that can efficiently map neural network models to NPU architectures. Open-source initiatives and industry collaborations will be key to achieving this.
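A core job of that software stack is deciding which parts of a model run on the NPU. A common approach is to place supported operations on the NPU and fall back to the CPU for the rest, grouping consecutive operations so that data crosses between devices as rarely as possible. The sketch below applies this idea to a model represented as a simple list of operation names; real compilers work on full computation graphs, and the supported-operation set here is hypothetical.

```python
from itertools import groupby
from typing import Iterable, List, Set, Tuple

# Hypothetical set of operations this NPU can execute natively.
NPU_SUPPORTED_OPS: Set[str] = {"conv2d", "relu", "max_pool", "matmul", "add"}


def partition(
    ops: Iterable[str], supported: Set[str] = NPU_SUPPORTED_OPS
) -> List[Tuple[str, List[str]]]:
    """Group consecutive ops into (device, ops) segments, using the CPU as fallback."""

    def device(op: str) -> str:
        return "npu" if op in supported else "cpu"

    return [(dev, list(group)) for dev, group in groupby(ops, key=device)]


model = ["conv2d", "relu", "max_pool", "custom_nms", "matmul", "add"]
segments = partition(model)
for dev, seg_ops in segments:
    print(dev, seg_ops)
print("device switches:", len(segments) - 1)
# npu ['conv2d', 'relu', 'max_pool']
# cpu ['custom_nms']
# npu ['matmul', 'add']
# device switches: 2
```

Each device switch typically means copying tensors between memories, so a model with many unsupported operations scattered through it can lose much of the NPU's advantage.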

Security and Privacy

As NPUs are increasingly deployed in sensitive applications such as healthcare and autonomous vehicles, ensuring the security and privacy of AI computations becomes paramount. Future NPUs will need to incorporate advanced security features to protect against data breaches and cyberattacks.

Ethical and Societal Implications

The widespread adoption of NPUs and AI technology brings with it ethical and societal considerations. Issues such as job displacement, bias in AI models, and the environmental impact of large-scale AI deployments need to be addressed. Policymakers, industry leaders, and researchers must work together to navigate these challenges responsibly.

Conclusion

Neural Processing Units represent a significant leap forward in the field of AI and machine learning. By providing specialized hardware designed to handle the computational demands of neural networks, NPUs deliver high performance and energy efficiency for these workloads. Their impact is already being felt across various industries, from mobile devices and healthcare to autonomous vehicles and edge computing.

As AI continues to evolve, NPUs will play a crucial role in unlocking new possibilities and driving innovation. However, realizing their full potential will require continued advancements in architecture, software support, and integration with other AI hardware. By addressing these challenges and opportunities, the future of NPUs looks incredibly promising, heralding a new era of intelligent and efficient computing.


This detailed exploration of Neural Processing Units (NPUs) highlights their transformative potential in the world of AI and machine learning. With their specialized architecture and high efficiency, NPUs are poised to transform various industries, paving the way for more advanced and efficient AI applications. As technology continues to advance, the role of NPUs will become increasingly critical, driving the future of intelligent systems.